@wednesdayayay Great thoughts. I have a lot of ideas like this for image analysis across multiple frames (a longer time constant). Other CV values it would be nice to have are angle of movement, amount of motion across the frame, etc.
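Here is a minimal sketch of how "amount of motion" and "angle of movement" could become slowly varying control values. It assumes each frame arrives as a small grayscale grid (nested lists of 0.0 to 1.0 floats). The names `motion_amount`, `motion_angle` and `Smoother` are hypothetical. The angle comes from tracking the brightness-weighted centroid, which is a crude stand-in for real optical flow. The one-pole smoother is one way to get a longer time constant.

```python
import math
from typing import List, Optional, Tuple

Frame = List[List[float]]  # grayscale, 0.0-1.0, frame[row][col]


def motion_amount(prev: Frame, curr: Frame) -> float:
    """Mean absolute per-pixel difference between two frames (0.0 = static)."""
    total = 0.0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(b - a)
            count += 1
    return total / count if count else 0.0


def centroid(frame: Frame) -> Optional[Tuple[float, float]]:
    """Brightness-weighted (x, y) centre of the frame, or None if all black."""
    sx = sy = weight = 0.0
    for y, row in enumerate(frame):
        for x, v in enumerate(row):
            sx += x * v
            sy += y * v
            weight += v
    if weight == 0.0:
        return None
    return sx / weight, sy / weight


def motion_angle(prev: Frame, curr: Frame) -> Optional[float]:
    """Direction the bright mass moved, in degrees (0 = right, 90 = up)."""
    c0, c1 = centroid(prev), centroid(curr)
    if c0 is None or c1 is None or c0 == c1:
        return None
    dx = c1[0] - c0[0]
    dy = c0[1] - c1[1]  # image rows grow downward, so flip to make "up" positive
    return math.degrees(math.atan2(dy, dx)) % 360.0


class Smoother:
    """One-pole low-pass filter: a longer time constant gives a slower, smoother CV."""

    def __init__(self, time_constant_frames: float) -> None:
        self.alpha = 1.0 / max(time_constant_frames, 1.0)
        self.value = 0.0

    def update(self, x: float) -> float:
        self.value += self.alpha * (x - self.value)
        return self.value
```

To use it, feed `motion_amount` or `motion_angle` into a `Smoother` once per frame. Raising `time_constant_frames` trades responsiveness for a steadier output.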

this in real-time

a system like the ffmpeg filters that can be cascaded/chained/routed would be awesome

angle of movement sounds rad too

this is maybe a little off topic, maybe not

paint by numbers

I was recently painting a backdrop for our production of Winnie the Pooh

the way we typically do this is to project onto the flats, trace, add some shading, then paint over it in whatever the desired style is

what I would like to do simply is

chroma key out several major colors (maybe just RGB, since we tend to only buy R, G & B paint and mix it ourselves anyway)

under each color would be an oscillator (static image is just fine)

maybe red would be fully horizontal, green would be between horizontal and vertical, and blue would be vertical

this seems doable in a system with 3 oscillators, 3 polar fringes, 3 doorways and a visual cortex

is this too ridiculous of a use case?
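Here is a rough software sketch of the same idea. It assumes the projected image is available as nested lists of (r, g, b) floats. `key_channel` is a crude "dominant channel" key standing in for the polar fringes. `stripe` is a square-wave oscillator whose lines run at a chosen angle, standing in for the oscillators and doorways. The angle mapping and the key margin are arbitrary choices, not anything taken from the LZX modules.

```python
import math
from typing import List, Optional, Tuple

RGB = Tuple[float, float, float]

# Assumed mapping: red -> horizontal lines, green -> diagonal, blue -> vertical.
STRIPE_ANGLE_DEG = {0: 0.0, 1: 45.0, 2: 90.0}


def key_channel(pixel: RGB, margin: float = 0.2) -> Optional[int]:
    """Return 0, 1 or 2 if R, G or B clearly dominates the pixel, else None."""
    ranked = sorted(range(3), key=lambda i: pixel[i], reverse=True)
    if pixel[ranked[0]] - pixel[ranked[1]] >= margin:
        return ranked[0]
    return None


def stripe(x: int, y: int, angle_deg: float, period: float) -> int:
    """Square-wave oscillator: 1/0 stripes whose lines run at angle_deg."""
    t = math.radians(angle_deg)
    # Sample at the pixel centre; d is the distance measured across the stripe lines.
    d = -(x + 0.5) * math.sin(t) + (y + 0.5) * math.cos(t)
    return 1 if (d / period) % 1.0 < 0.5 else 0


def paint_by_numbers(image: List[List[RGB]], period: float = 4.0) -> List[List[int]]:
    """Fill each keyed region with its own stripe pattern; unkeyed pixels stay 0."""
    out = []
    for y, row in enumerate(image):
        out_row = []
        for x, px in enumerate(row):
            ch = key_channel(px)
            out_row.append(0 if ch is None else stripe(x, y, STRIPE_ANGLE_DEG[ch], period))
        out.append(out_row)
    return out
```

Projecting the 1/0 output onto the flats would give one hatching direction per paint color to trace.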

scrolling bang

with the additional scrolling options that diver and memory palace open up, perhaps it would be interesting to derive triggers/gates/bangs/go messages for scripting from a settable threshold on the N/E/S/W edges around the outside of the viewing screen, and to allow these triggers etc. to be assigned wherever

this could really open up some self-playing/self-wiping possibilities
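Here is a rough sketch of the edge-threshold idea. It assumes grayscale frames as nested lists of 0.0 to 1.0 floats. The code measures the mean level along each edge strip and fires a bang only on the frame where a face rises through its threshold. That way a bright edge that stays bright does not retrigger every frame. `edge_levels` and `EdgeBangs` are hypothetical names, and routing the bangs is left to the caller.

```python
from typing import Dict, Iterable, List

Frame = List[List[float]]  # grayscale, 0.0-1.0, frame[row][col]


def _mean(values: Iterable[float]) -> float:
    vals = list(values)
    return sum(vals) / len(vals)


def edge_levels(frame: Frame, depth: int = 1) -> Dict[str, float]:
    """Mean brightness of a `depth`-pixel-wide strip along each screen edge."""
    h, w = len(frame), len(frame[0])
    return {
        "N": _mean(v for row in frame[:depth] for v in row),
        "S": _mean(v for row in frame[h - depth:] for v in row),
        "W": _mean(row[x] for row in frame for x in range(depth)),
        "E": _mean(row[x] for row in frame for x in range(w - depth, w)),
    }


class EdgeBangs:
    """Emit a bang when an edge's level rises through its threshold."""

    def __init__(self, thresholds: Dict[str, float]) -> None:
        self.thresholds = thresholds
        self.above = {face: False for face in thresholds}

    def process(self, frame: Frame) -> List[str]:
        levels = edge_levels(frame)
        bangs = []
        for face, threshold in self.thresholds.items():
            is_above = levels[face] >= threshold
            if is_above and not self.above[face]:
                bangs.append(face)  # rising edge only: a steady bright edge bangs once
            self.above[face] = is_above
        return bangs
```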

picture in picture

making picture in picture a bit easier would be very nice, but it would require more than one RGB path on the module, multiple modules, or a 3-channel grayscale PIP workflow, which I feel would still be fun and elevating.

thinking back, I seem to remember the sampler module being talked about as a video collage module, so maybe some similar territory is covered

color pick and switch

something like the polar fringe chroma keying where, in addition to picking the color you want to pluck out or around, you also pick the color that goes in there instead

“typing this out loud” made me realize that polar fringe and color chords would cover this, but it would be neat to have it as a simple programmable & reusable module that could be stacked for a larger re-colorization patch
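Here is a minimal sketch of a "pick and switch" stage. It assumes images as nested lists of (r, g, b) floats and uses plain Euclidean RGB distance as the key, which is much cruder than a real chroma keyer. Each call is one reusable stage. `recolor_chain` stacks stages in series, so order matters: a later stage can re-catch colors that an earlier one produced. All names are hypothetical.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]


def color_distance(a: RGB, b: RGB) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pick_and_switch(image: Image, pick: RGB, replace: RGB, tolerance: float = 0.1) -> Image:
    """One stage: swap every pixel within `tolerance` of `pick` for `replace`."""
    return [[replace if color_distance(px, pick) <= tolerance else px for px in row]
            for row in image]


def recolor_chain(image: Image, stages: List[Tuple[RGB, RGB, float]]) -> Image:
    """Stack several pick/switch stages in series, like patching modules in a row."""
    for pick, replace, tolerance in stages:
        image = pick_and_switch(image, pick, replace, tolerance)
    return image
```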

Quadrants

perhaps even something where you could assign tasks to quadrants of the screen (I seem to remember someone mentioning this a while back, and I’ve got some experiments of my own going in this territory that I haven’t written about yet)

if we could write conditional statements based on the location (within the quadrant) of a color blob meeting a specific requirement for size and color, that would be pretty neat
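Here is a sketch of what such a conditional could look like, assuming RGB frames as nested lists of floats. `find_blobs` counts pixels near a target color in each quadrant and reports their centroid. `check_rule` turns that into a true/false condition. It counts matching pixels per quadrant rather than doing real connected-component blob detection. All names and the per-channel tolerance test are assumptions.

```python
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]


def quadrant_of(x: int, y: int, w: int, h: int) -> str:
    """Name the screen quadrant a pixel sits in: NW, NE, SW or SE."""
    return ("N" if y < h / 2 else "S") + ("W" if x < w / 2 else "E")


def find_blobs(image: Image, color: RGB, tolerance: float = 0.15
               ) -> Dict[str, Tuple[int, Tuple[float, float]]]:
    """Per quadrant: (matching pixel count, centroid) of pixels near `color`."""
    h, w = len(image), len(image[0])
    acc: Dict[str, list] = {}
    for y, row in enumerate(image):
        for x, px in enumerate(row):
            if max(abs(a - b) for a, b in zip(px, color)) <= tolerance:
                stats = acc.setdefault(quadrant_of(x, y, w, h), [0, 0.0, 0.0])
                stats[0] += 1
                stats[1] += x
                stats[2] += y
    return {name: (n, (sx / n, sy / n)) for name, (n, sx, sy) in acc.items()}


def check_rule(image: Image, color: RGB, quadrant: str, min_size: int,
               tolerance: float = 0.15) -> bool:
    """True when `quadrant` holds at least `min_size` pixels near `color`."""
    blob = find_blobs(image, color, tolerance).get(quadrant)
    return blob is not None and blob[0] >= min_size
```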

automatic and/or guided drawing could be neat; the more I look into this application, the more I see spaces where I think memory palace should be able to function similarly

but having it be scriptable rather than more about the physical patching could be very interesting

I’m going to dive into this application and get some more concrete suggestions

piggybacking on @luix’s post above from that same website

some kind of CRTifying would be great

I believe something that allows for ASCIIing live video, with live keyboard input for swapping letters in and out (like muting and unmuting that “letter channel”), would take the idea further into some new territory
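Here is a small sketch of the muteable "letter channel" idea, assuming grayscale frames as nested lists of 0.0 to 1.0 floats. Each brightness level maps to one character on a ramp, and any character in the `muted` set is drawn as a space. The ramp string and the key-handling function are hypothetical. Hooking `toggle_mute` up to real keyboard input is left out.

```python
from typing import List, Set

# Dark-to-bright character ramp; an arbitrary choice, swap in any symbols you like.
RAMP = " .:-=+*#%@"


def to_ascii(frame: List[List[float]], muted: Set[str], ramp: str = RAMP) -> List[str]:
    """Map each 0.0-1.0 pixel to a ramp character; muted characters become spaces."""
    lines = []
    for row in frame:
        chars = []
        for v in row:
            idx = min(max(int(v * len(ramp)), 0), len(ramp) - 1)  # clamp to the ramp
            ch = ramp[idx]
            chars.append(" " if ch in muted else ch)
        lines.append("".join(chars))
    return lines


def toggle_mute(muted: Set[str], key: str) -> None:
    """Keyboard handler: each key press mutes or unmutes that 'letter channel'."""
    if key in muted:
        muted.discard(key)
    else:
        muted.add(key)
```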

I think there was an old-school QuickTime demo that did this, source code and all. I’d guess from the 2004 or so era, but maybe earlier.

so I really enjoy watching the Processing tutorial videos from here

and he just recently released a video about a new application called Runway that I think anyone interested in this machine learning area may want to take a look at

that being said, it is helping me focus on what I want to see from the video synth

spade coco

so one of the models (spade coco) allows you to import or draw with certain colors it has been trained on, so dark blue might be ocean and green might be grass

you draw (using a built-in basic drawing app) or import an image, and then it is translated into a picture; you can see what I drew and the result

the idea that we could potentially have something drawing images solely based on the colors present in our LZX patches opens up some very interesting possibilities

tracking

I think the skeleton/face tracking would be very useful too, if we could key either the resulting XY space from the face detection or the skeleton out of the input so that it could be composited in somewhere else

it would be a lot of fun to take the resulting “skeleton key” through doorway and get it nice and soft then mix that back in with the original through a visual cortex/marble index

spade landscapes

so there is another model (spade landscapes) which takes text input and outputs a picture

“the sun is on fire a bucket of fish and ten little boys”

obviously if you make an effort to work within the confines of the described models, you will get a more “pleasing” output

I’m not sure how to make this work with LZX just yet…

although there is another model that takes an input image and outputs text…

we could create the google translate of video synths

create an image to be described textually

then create an image from that text

rinse repeat

so I just tried the im2txt model on that same image I generated above

and it gave me

“a fire hydrant that is on a sidewalk .”

sending it back through the original model

then I got

“a red fire hydrant sitting on the side of a road .”

then it got stuck in a loop

so the tasty aspect here would be keying video synth stuff in with the images, thus throwing things off a bit so that we don’t end up getting stuck in a loop
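Here is a sketch of that "don’t get stuck" logic, with the models left abstract. `step` is a hypothetical placeholder for one full round trip (text to image to text) through whatever models are in use. `perturb` is a placeholder for keying video synth material into the image. Nothing here calls Runway or any real model API. The loop simply remembers every caption it has seen and perturbs whenever one repeats.

```python
from typing import Callable, List


def feedback_loop(start: str,
                  step: Callable[[str], str],
                  perturb: Callable[[str, int], str],
                  iterations: int) -> List[str]:
    """Run a caption -> image -> caption feedback loop, perturbing repeats.

    `step` stands in for one full round trip through the models;
    `perturb` stands in for keying video synth material into the image.
    """
    history = [start]
    seen = {start}
    current = start
    for i in range(iterations):
        nxt = step(current)
        if nxt in seen:  # we have been here before: the loop is stuck
            nxt = perturb(nxt, i)
        seen.add(nxt)
        history.append(nxt)
        current = nxt
    return history
```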

dense depth

there is a great model (dense depth) which predictably gives a grayscale output that represents the depth of the input

I can only imagine patching in depth in this way combined with a dual cortex/projector setup

put the outputs of cortex 1/2 into red/blue, offset them slightly (via the projectors) in the X axis, and we should be able to make some 3D video synth…
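The post does the offset optically with two projectors. As a purely digital stand-in, here is a rough sketch that uses a depth map (0.0 far, 1.0 near, in the style of a dense depth output) to shift each row horizontally per pixel. One view goes in the red channel and the other in blue. This is a simplified depth-based re-rendering with nearest-pixel sampling and edge clamping. It does not reflect how dense depth or any LZX module works, and which eye gets which direction is an arbitrary choice.

```python
from typing import List, Tuple

Gray = List[List[float]]


def shifted_view(img: Gray, depth: Gray, max_shift: int, direction: int) -> Gray:
    """Resample each row, offsetting by depth-scaled disparity (backward mapping)."""
    out = []
    for row, drow in zip(img, depth):
        w = len(row)
        new_row = []
        for x in range(w):
            shift = round(drow[x] * max_shift) * direction  # nearer pixels shift more
            src = min(max(x + shift, 0), w - 1)  # clamp at the frame edges
            new_row.append(row[src])
        out.append(new_row)
    return out


def anaglyph(img: Gray, depth: Gray, max_shift: int = 2) -> List[List[Tuple[float, float, float]]]:
    """Red channel = one eye, blue channel = the other, green left dark."""
    left = shifted_view(img, depth, max_shift, +1)
    right = shifted_view(img, depth, max_shift, -1)
    return [[(l, 0.0, r) for l, r in zip(lrow, rrow)] for lrow, rrow in zip(left, right)]
```

With a flat (all-zero) depth map both views are identical, so the result has no red/blue fringing. Only the areas the depth map marks as near get separated.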

I have only played around in Runway for an hour or so and it has been great fun

I’m a big fan of the Monome Teletype and utility modules like Ornament & Crime… Having something like that for video - something that could respond to (and output) CV and have processing-like animation powers could be great.

That sounds really cool.

What kind of functions/primitives would you envisage it having?

The type of primitives I would expect are some high-level basic building blocks à la Cadet, or like the block diagram on any LZX Expedition reference card, e.g. Navigator: a mixer/inverter, a rotator, a gamma function, etc.

Or Arch: some analog functions.

LZX has not said anything like this, but that’s what I would expect. Remember, we will probably be doing a mix of VHDL (for defining some physical connections on the FPGA) and C++. Or maybe it’s possible to use an even higher-level programming language like Python.
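Purely to illustrate what composable primitives could look like in Python (nothing LZX has announced), here are a few per-sample functions in the spirit of the Navigator/Arch block diagrams. A `chain` helper patches them in series. All names are hypothetical. Each function is the simplest textbook version of its operation, not a model of how any LZX circuit behaves.

```python
import math
from typing import Callable, Tuple

Signal = float  # one video sample, nominally 0.0-1.0


def invert(x: Signal) -> Signal:
    return 1.0 - x


def mix(a: Signal, b: Signal, amount: float) -> Signal:
    """Crossfade: amount 0.0 -> a, 1.0 -> b."""
    return a + (b - a) * amount


def gamma(x: Signal, g: float) -> Signal:
    return max(x, 0.0) ** g


def rotate(h: float, v: float, degrees: float) -> Tuple[float, float]:
    """Rotate a pair of centred horizontal/vertical ramp values, as a 2D rotator would."""
    t = math.radians(degrees)
    return (h * math.cos(t) - v * math.sin(t), h * math.sin(t) + v * math.cos(t))


def chain(*stages: Callable[[Signal], Signal]) -> Callable[[Signal], Signal]:
    """Patch single-input primitives in series, left to right."""
    def run(x: Signal) -> Signal:
        for stage in stages:
            x = stage(x)
        return x
    return run
```

For example, `chain(invert, lambda x: gamma(x, 2.0))` behaves like an inverter patched into a gamma stage.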

I’m very excited to get started with it

I see that the lzxindustries GitHub account has forked nodeeditor, so maybe something in that direction

Can’t wait to see what’s in the oven

It’s gonna be amazing for sure

As a less technically minded LZX user, I use TouchDesigner a lot with LZX, and I know other folks do too. I’ve found it the most accessible and most ‘open’ system from my perspective, and it pairs well with LZX already.

Pros:

Free to use

It uses Python (and GLSL if you want), so I guess that interfaces with PYNQ, and you can approach TD in a script-y way as well as a node way.

Lots of easy realtime I/O options already ready to go: CV, OSC, audio, MIDI, Kinect, lasers, projection mapping, all sorts.

Lots of basic functions already there (e.g. Perlin, simplex and other noises for VanTa above; image analysis across multiple frames is already accessible for a noob like me, and is one of the things I do with it, as is fancier motion detection). Also lots of free ‘nodes’ already made by people to do other stuff, e.g. import OpenGL shaders, create mandelbulbs, whatever.

Mac and PC compatible (though Mac being non-NVIDIA now means it’s the weaker option for some things)

Lots of existing community and development stuff to connect to for future possibilities.

My use cases/problems solved/imaginary possibilities:

- I pipe stuff from TD into LZX and back into TD, with Blackmagic devices in between. I’ve used it to create complex realtime sources in TD from sound modulation, OSC or whatever, and send these (alongside CV impulses/LFOs for timing) to LZX (e.g. I use it as a noise generator looping into CV with LZX, though obviously TD’s noise output is video, not CV). And I’ve sent LZX back into TD to process LZX images/CV impulses, doing things like turning nice ramp patterns into 3D shapes or making them part of more complex graphic outputs. For me the possibilities are about moving from 2D into 3D rendering in realtime, for general complexity, and being able to interact through OSC, Kinect etc.

- I’m starting to explore machine learning stuff as more accessible tools for this appear (including via TD). This is a big growing area, and I expect it would be a primary use case by the time this module was ready to see the light of day.

The problems solved are basically that it would make things more self-contained in the rack, and more immediate/performable than going back and forth to the laptop, while offering more creative openness and complexity than exists right now. I didn’t get an Erogenous Tones Structure because its use of shaders as outputs seemed a bit basic and limited compared to what I could already do with TD and a circuit of CVs/Blackmagic devices.

I’d like to see the ability to take a TD project made on a laptop and load it into a module’s bank of projects/patches, having set the project’s CV and video inputs and outputs to match the module’s CV/video I/O, so I can then perform it as a patch within my LZX patch. I guess the alternative to putting TD into a module, as suggested above, is something like a video-rate-conversion Expert Sleepers device to facilitate more laptop-LZX communication.

Wednesdayayay, there’s a simple TD ASCII noise tutorial here where you can swap in whatever symbols you like. You could pipe video into it rather than noise, too: https://youtu.be/uTXZJrtjGV8

oh good lord

this is wonderful

I’ve installed TD on my computer twice but never really sunk into it

I love the idea of a video rate conversion expert sleepers type thing

I don’t have much to add at this point but am so excited to see how this gets pushed forward

I found this TD tutorial great as my first ever dive to get my head around it: https://youtu.be/wmM1lCWtn6o and from there the Matthew Ragan tutorials on YouTube are really great intros. Over the past 12 months or so, several folks have started great YouTube channels for specific techniques; the most video-synth-esque is maybe Paketa12, who does some astonishing things using only TOP-based (2D image) analysis.

So on the TD/Video Tools discussion, Eric Souther has a recent tutorial series covering the topic of artist tool development which he shares within a TD learning environment. So it may be of use/interest to many of you. Link to part 1:

I use the combo extensively, one thing I love to do is one particle in TD per ‘pixel’ of my LZX output.
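Here is a minimal Python sketch of the "one particle per pixel" mapping, done outside TouchDesigner. Each pixel becomes a point whose x/y come from its position in the frame and whose z comes from its brightness. The coordinate ranges and `z_scale` are assumptions. Inside TD this would presumably be handled with its own instancing tools rather than a Python list.

```python
from typing import List, Tuple


def pixels_to_particles(frame: List[List[float]], z_scale: float = 1.0
                        ) -> List[Tuple[float, float, float]]:
    """One particle per pixel: x/y from position (-1..1), z from brightness."""
    h, w = len(frame), len(frame[0])
    particles = []
    for y, row in enumerate(frame):
        for x, v in enumerate(row):
            px = (x + 0.5) / w * 2.0 - 1.0
            py = 1.0 - (y + 0.5) / h * 2.0  # flip so row 0 is at the top
            particles.append((px, py, v * z_scale))
    return particles
```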

If you want to get into shaders and GLSL in TD, my course is a good starting point: https://thenodeinstitute.org/courses/vanta-glsl-shader-in-touchdesigner/

I’d like to second @wednesdayayay re: shbobo shnth & fish. If you’re not familiar, shnth is a little reprogrammable wooden handheld synth; it has some buttons, bars and antennae, all of which are assignable to different sounds, movements, whatever.

Like, you can program it so that when you press button 1 it triggers an envelope, or a horn, or any number of things. It’d be really ace to have voltage control I/O that’s fully programmable to route to wherever & whatever.

I reckon the community would build a lot of the “modules” within the software environment, should the skeleton exist.
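Here is one way to picture the "fully programmable routing" idea, as a sketch. It pairs a routing table, where any named control can be assigned to any number of actions, with a simple linear attack/decay envelope as one such action. `Router`, `Envelope` and the tick-based timing are hypothetical. They have nothing to do with how shnth’s fish language actually works.

```python
from typing import Callable, Dict, List

Action = Callable[[float], None]


class Router:
    """Reassignable routing table: any control can drive any number of actions."""

    def __init__(self) -> None:
        self.routes: Dict[str, List[Action]] = {}

    def assign(self, control: str, action: Action) -> None:
        self.routes.setdefault(control, []).append(action)

    def clear(self, control: str) -> None:
        self.routes.pop(control, None)

    def handle(self, control: str, value: float) -> None:
        for action in self.routes.get(control, []):
            action(value)


class Envelope:
    """Linear attack/decay envelope, advanced one tick (e.g. one frame) at a time."""

    def __init__(self, attack_ticks: int, decay_ticks: int) -> None:
        self.attack_step = 1.0 / max(attack_ticks, 1)
        self.decay_step = 1.0 / max(decay_ticks, 1)
        self.level = 0.0
        self.stage = "idle"

    def trigger(self, _value: float = 1.0) -> None:
        self.stage = "attack"

    def tick(self) -> float:
        if self.stage == "attack":
            self.level = min(self.level + self.attack_step, 1.0)
            if self.level >= 1.0:
                self.stage = "decay"
        elif self.stage == "decay":
            self.level = max(self.level - self.decay_step, 0.0)
            if self.level <= 0.0:
                self.stage = "idle"
        return self.level
```

Reassigning a control is just `clear` followed by new `assign` calls, which keeps the patching itself scriptable.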

Also, I second whoever mentioned a long sample storage situation. It would be amazing to build a sampler.

You’re all aware of Erogenous Tones’ Structure right?? It’s basically a tiny (4 nodes at a time) version of TouchDesigner in a module. CV, audio, and MIDI controllable GLSL and Python with composite or LZX RGB video capture built in. (Edit: oops, I didn’t scroll back far enough, but they recently added Python)

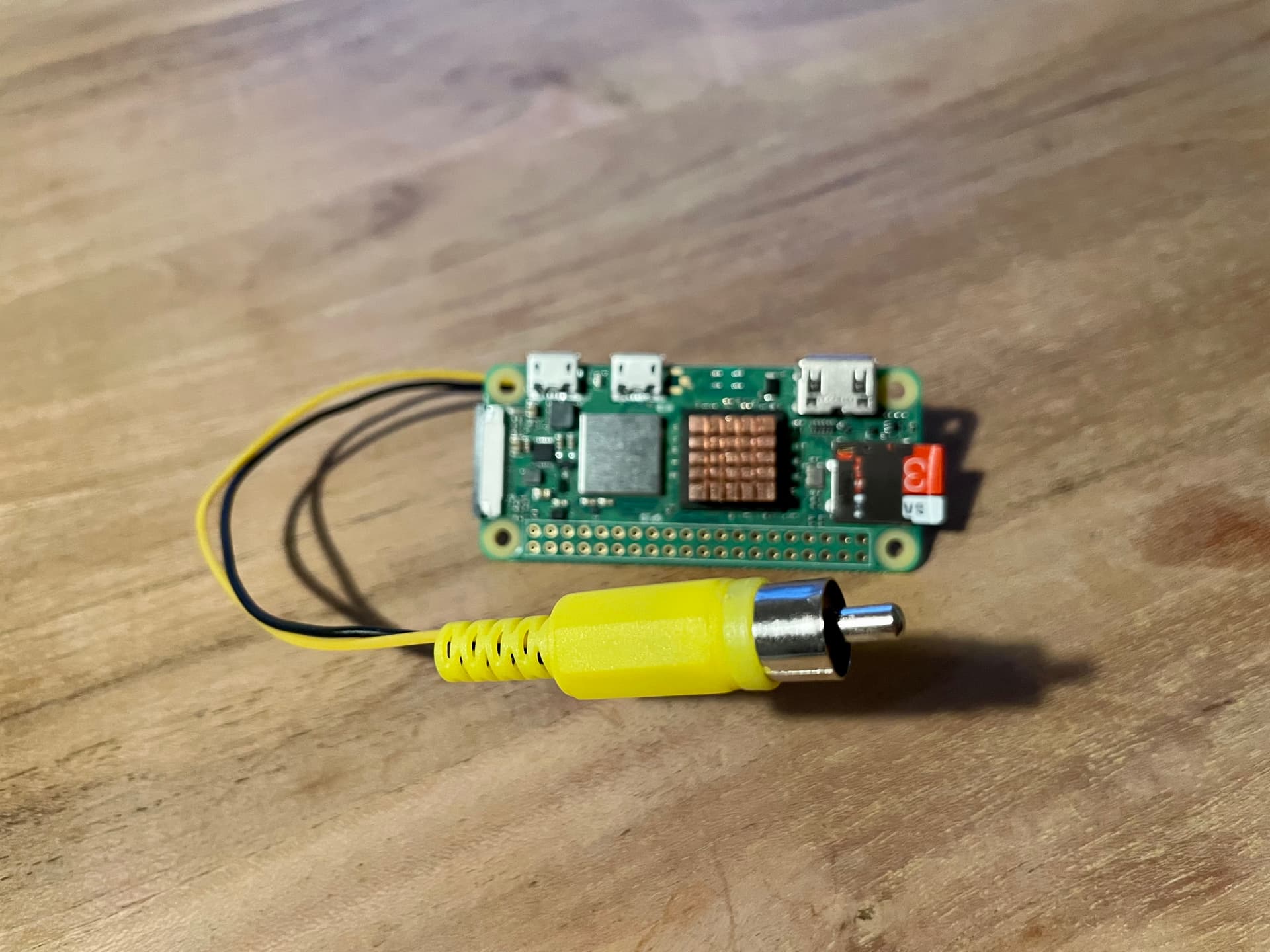

The Raspberry Pi Zero 2 W makes a perfect scriptable video module! Tiny and budget-friendly (for $20 you have all you need). I like to use simple shaders as a randomness/texture/gradient input and this is a great tool.

You definitely can’t beat the Pi in terms of generating video independently at low cost! The main limitation for our use case is that there’s no real way to genlock the output timing such that you could synchronize to an external video reference. So, for example, you can’t just mix the output of two Pis together passively – you need a separate device (video mixer) to do it. So that inhibits how far it can be taken in a modular, analog patching context.

can you point me in the right direction on how to get it to output those patterns?

This might be a good 1st Pi project for me

thanks in advance! martijn